Abstract

In the digital era, the internet and social media platforms have emerged as predominant channels for news production and distribution. This transition has led to a marked increase in the quantity and accessibility of news sources. While this democratisation of information is beneficial, it also presents challenges, notably the proliferation of false news and misinformation, which poses a significant threat to societal stability and to the public’s capacity to distinguish fact from falsehood. The problem is particularly acute in Pidgin-speaking communities in West Africa, where reliance on social media for news is widespread. Misinformation spreads widely in domains such as politics, health (e.g., herbal remedies, vaccines), and finance (e.g., Ponzi schemes, fraudulent business opportunities). Furthermore, AI-generated multimedia content makes detection harder, as such content is difficult to analyse using traditional fact-checking methods. To address these challenges, this study proposes a hybrid AI-driven fact-checking model that leverages graph neural networks (GNNs) and Transformer-based architectures to enhance misinformation detection across various modalities. The proposed system integrates Transformer models such as BERT and RoBERTa for textual misinformation analysis, a convolutional neural network (CNN)-based deepfake detector for multimedia verification, and GNNs for modelling misinformation propagation within social networks. By combining content analysis with network-based misinformation tracking, this research aims to develop a comprehensive and efficient fact-checking framework capable of addressing the evolving landscape of digital misinformation.

Introduction

The rapid spread of misinformation through social media platforms has raised concerns regarding its impact on public opinion. Alongside the benefits of social media connectivity, such as the ability to share information instantaneously with a large audience, the spread of inaccurate and misleading information has emerged as a major problem. Misinformation spread via social media has far-reaching consequences: it can create false narratives related to health (e.g., COVID-19 and vaccine misinformation), politics (e.g., election interference), and religion (e.g., inciting sectarian tensions), which continue to cause widespread confusion, distrust, and, in some cases, violence. While significant advances have been made in misinformation detection, most research has concentrated on English and other high-resource languages in monolingual settings, and low-resource languages are often overlooked. Human fact-checking efforts are overwhelmed and often ineffective at identifying AI-generated misinformation, especially deepfakes and synthetic text, leaving vulnerable communities exposed to digital manipulation. Despite the prevalence of Pidgin English in West Africa, there is a noticeable lack of research and resources dedicated to this language variant.

During events such as the Ebola outbreak, for instance, culturally ingrained misinformation, including conspiracy theories and false remedies, had a detrimental impact on the public. Pidgin-speaking communities struggle to access reliable fact-checking sources because of language barriers and limited digital literacy. Despite being a widely spoken lingua franca, Pidgin is often disregarded in natural language processing models. Many studies rely on high-resource language datasets, and the resulting models perform poorly on low-resource languages because of differences in linguistic structure and cultural context. A significant portion of existing research also focuses solely on textual data, neglecting the multimodal nature of misinformation, which often includes images, videos, and network propagation patterns. The mixing of Pidgin and English within the same content further complicates the identification of misinformation, a difficulty compounded by the absence of AI fact-checking tools tailored to Pidgin. Traditional verification methods struggle with low-resource languages, and existing automated systems are biased towards high-resource languages, which renders them ineffective for languages such as Pidgin.

Addressing these challenges necessitates the development of hybrid, multimodal models that integrate textual analysis, multimedia content evaluation, and network propagation understanding, all tailored to the linguistic and cultural specifics of the target communities. This study proposes a hybrid, multimodal fact-checking framework that leverages the strengths of three AI paradigms: Transformers for textual misinformation detection, convolutional neural networks (CNNs) for multimedia analysis, and graph neural networks (GNNs) for modelling how misinformation spreads across digital platforms. The framework is specifically tailored to process content in Nigerian Pidgin and to identify misinformation in critical domains such as health, politics, and religion. This hybrid approach not only ensures content verification but also provides a deeper insight into the dissemination of misinformation within Pidgin-speaking online communities. Additionally, the model incorporates bias-mitigation strategies, including multilingual training datasets and fairness-aware machine learning techniques, to enhance fact-checking accuracy in marginalised regions of West Africa.

This study seeks to bridge the gap in misinformation detection for low-resource languages and marginalised communities by introducing a scalable, context-aware, and multimodal verification system. By enhancing fact-checking capabilities for both textual and multimedia content, the research will contribute to low-resource natural language processing, misinformation detection, and AI ethics, offering a more inclusive and culturally relevant approach to combating digital misinformation.

Objectives of the study

- Design and implement a hybrid artificial intelligence model that integrates Transformer-based architectures for textual analysis, convolutional neural networks (CNNs) for multimedia content evaluation, and graph neural networks (GNNs) for modelling misinformation propagation.

- Investigate how misinformation spreads differently across health, political, and religious domains within Pidgin-speaking communities. Utilise GNNs to model these dissemination patterns, providing insights into the dynamics of misinformation propagation in various contexts.

- Assess the influence of deploying an AI-driven fact-checking framework in Pidgin on public trust in information sources.

Research Questions

The following research questions have been created to guide the research.

- How can a hybrid AI model integrating Transformers, CNNs, and GNNs effectively detect and mitigate misinformation in Pidgin-speaking West African communities, particularly concerning health, politics, and religion?

- What are the unique linguistic and cultural challenges in processing Pidgin English content, and how can AI models be adapted to address these challenges in misinformation detection?

- How can bias mitigation techniques, such as multilingual training datasets and fairness-aware machine learning methods, enhance the accuracy and fairness of misinformation detection in marginalised regions?

- In what ways does the propagation of misinformation differ across health, political, and religious domains within Pidgin-speaking communities, and how can GNNs model these dissemination patterns?

- Will an AI-driven fact-checking framework in Pidgin influence public trust in information disseminated through this multilingual framework?

Literature Review

Accessing reliable fact-checking sources for low-resource languages like Nigerian Pidgin presents significant technical challenges. These challenges stem from data scarcity, linguistic complexities, and the lack of specialised tools tailored to these languages. However, recent advancements in natural language processing (NLP) and cross-lingual learning have proposed innovative solutions to address these barriers. This review explores the technical barriers and solutions, drawing insights from relevant research papers.

For instance, Mahl et al. (2024) address the imbalance in research on fact-checking initiatives, which has focused primarily on the Global North and particularly the United States, by introducing a context-sensitive framework for analysing fact-checking cultures. The study highlights the need for broader comparative studies in fact-checking. Mridha et al. (2021) examine the challenge organisations face in detecting fake news online and the efficacy of deep learning techniques in addressing it. Their review highlights the strong performance of deep learning approaches such as attention mechanisms, Generative Adversarial Networks (GANs), and Bidirectional Encoder Representations from Transformers (BERT) in fake news detection. By categorising and evaluating these techniques, the paper emphasises the need for further research to enhance the accuracy and efficiency of fake news detection.

Školkay and Filin (2019) evaluated the effectiveness of artificial intelligence (AI) tools in combating misinformation by analysing and ranking existing AI-based solutions for fake news detection and fact-checking. Their research used a comparative approach to assess the accuracy and comprehensiveness of these systems. Chaka (2022) examines digital marginalisation, data marginalisation, and algorithmic exclusion within the context of the Global South, showing how underrepresented users and communities are marginalised and excluded through the use of digital technologies, big data, and algorithms by various entities.

According to Wang, Zhang, and Rajtmajer (2023), low-resource languages (LRLs) like Pidgin face significant technical barriers, including limited annotated datasets, inadequate digital tools, and a lack of research attention. These challenges hinder effective misinformation detection and access to reliable fact-checking sources. Solutions include developing language-agnostic models that can operate across diverse linguistic contexts, improving data collection practices, and fostering interdisciplinary collaboration. Additionally, enhancing multilingual training can significantly boost detection performance, ensuring that LRLs are not overlooked in misinformation moderation efforts.

Although Lin et al. (2023) do not specifically address access to reliable fact-checking sources for low-resource languages like Pidgin, they highlight challenges such as data scarcity and orthographic variation, which hinder effective spoken language processing. Their proposed solutions include collecting a large-scale parallel English-Pidgin corpus and employing cross-lingual adaptive training to improve model performance, which could indirectly support better access to reliable information by enhancing language processing capabilities.

Methodology

1. Data Collection

- Textual Data: Gather Pidgin English content from social media platforms, news websites, and fact-checking organisations. Ensure the dataset includes examples from health, political, and religious contexts.

- Multimedia Data: Collect images and videos associated with the textual data, focusing on content that has been identified as misinformation or verified information.

- Social Network Data: Map the dissemination of misinformation by collecting data on how information spreads within Pidgin-speaking online communities.

2. Data Preprocessing

- Textual Data: Normalise Pidgin English text, addressing issues like non-standard spelling and grammar. Implement tokenisation and remove noise to prepare the data for model training.

- Multimedia Data: Extract features from images and videos using CNNs to identify patterns indicative of misinformation, such as inconsistencies or manipulations.

- Social Network Data: Construct graphs representing the spread of information, where nodes represent users or posts, and edges represent interactions or shares.
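The text normalisation and graph construction steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the study's pipeline: the spelling-variant map `VARIANTS`, the function names `normalise` and `build_share_graph`, and the `(source_user, resharing_user)` record format are all hypothetical choices made for the example.

```python
import re
from collections import defaultdict

# Hypothetical spelling-variant map (assumption): a real map would be
# curated from the collected Pidgin corpus, not hard-coded like this.
VARIANTS = {"wettin": "wetin", "sey": "say"}

URL_RE = re.compile(r"https?://\S+")    # noise: links
TOKEN_RE = re.compile(r"[a-z0-9']+")    # simple word-level tokens


def normalise(text):
    """Lowercase, strip URLs, tokenise, and map spelling variants."""
    text = URL_RE.sub(" ", text.lower())
    tokens = TOKEN_RE.findall(text)
    return [VARIANTS.get(tok, tok) for tok in tokens]


def build_share_graph(shares):
    """Build a directed graph from (source_user, resharing_user) pairs.

    Nodes are users; an edge src -> dst means dst reshared content from src.
    Returns a dict mapping each node to the set of its successors.
    """
    graph = defaultdict(set)
    for src, dst in shares:
        graph[src].add(dst)
        graph.setdefault(dst, set())  # make sure leaf nodes appear too
    return dict(graph)


tokens = normalise("Wettin dem sey? https://example.com/x")
graph = build_share_graph([("a", "b"), ("a", "c"), ("b", "c")])
```

The adjacency-set representation is only one option; the same edge list could be loaded into a graph library before being passed to a GNN.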

3. Model Development

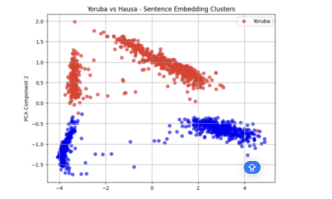

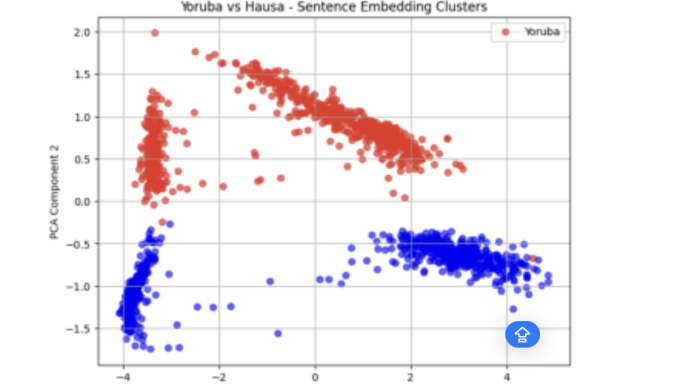

- Transformer Models: Fine-tune pre-trained models like BERT or RoBERTa on the Pidgin English dataset to capture contextual nuances in the text.

- CNNs for Multimedia Analysis: Train CNNs to detect anomalies or manipulations in images and videos that are commonly associated with misinformation.

- GNNs for Propagation Modelling: Utilise GNNs to analyse the structure of information dissemination networks, identifying patterns that are characteristic of misinformation spread.

- Hybrid Integration: Combine the outputs of the Transformer models, CNNs, and GNNs to create a comprehensive system capable of assessing the veracity of information across multiple modalities.

- Fairness-Aware Algorithms: Implement algorithms designed to detect and correct biases in model predictions, ensuring equitable performance across different demographic groups.
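The hybrid integration step above does not fix a particular fusion method. One simple possibility is weighted late fusion, sketched below: each component (Transformer, CNN, GNN) is assumed to output a misinformation probability in [0, 1], and the scores are combined by a weighted average. The function name `fuse_scores`, the modality keys, and the weights are hypothetical and chosen only for illustration.

```python
def fuse_scores(scores, weights):
    """Weighted average of per-modality misinformation probabilities.

    scores:  dict mapping modality name -> probability, or None when the
             modality is absent (e.g. a text-only post has no image score).
    weights: dict mapping modality name -> non-negative weight (assumed values).

    Missing modalities are skipped and the remaining weights renormalised.
    """
    available = {m: s for m, s in scores.items() if s is not None}
    if not available:
        raise ValueError("at least one modality score is required")
    total = sum(weights[m] for m in available)
    if total <= 0:
        raise ValueError("weights of available modalities must sum to > 0")
    return sum(weights[m] * s for m, s in available.items()) / total


# Illustrative weights only; in practice they could be tuned on validation data.
WEIGHTS = {"text": 0.5, "image": 0.2, "graph": 0.3}

full = fuse_scores({"text": 0.9, "image": 0.4, "graph": 0.6}, WEIGHTS)
text_and_graph = fuse_scores({"text": 0.9, "image": None, "graph": 0.6}, WEIGHTS)
```

Late fusion keeps the three models independently trainable. A learned fusion layer over concatenated embeddings is an alternative, but it requires training data in which all modalities are present together.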

Evaluation

Assess model accuracy, precision, recall, and F1-score to evaluate effectiveness.

1. Accuracy: Accuracy is the fraction of all predictions that the model gets right, i.e., the ratio of the number of correct predictions to the total number of predictions; it may also be expressed as a percentage. It is computed from the confusion-matrix counts of true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN):

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{1}$$

2. Precision: Precision indicates the quality of the positive predictions made by the model. It is the number of true positives (TP) divided by the total number of positive predictions, i.e., true positives plus false positives (FP). Precision therefore measures the proportion of predicted positives that were accurately predicted.

$$\text{Precision} = \frac{TP}{TP + FP} \tag{2}$$

3. Recall: Recall (also called sensitivity or the true positive rate) is the number of true positives (TP) divided by the total number of actual positive instances, i.e., true positives plus false negatives (FN). Whereas precision measures how many of the predicted positives are correct, recall measures how many of the actual positives the model retrieves.

$$\text{Recall} = \frac{TP}{TP + FN} \tag{3}$$

4. F1 Score: The F1 score combines precision and recall into a single measure; it is the harmonic mean of the two. It is more informative than accuracy when the classes are imbalanced, because accuracy can remain high even when the minority class is rarely detected.

$$F_1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} \tag{4}$$
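As a worked illustration of the four metrics, the sketch below computes them from confusion-matrix counts. The function name `classification_metrics` and the example counts are hypothetical; they are not results from this study. The second example shows why accuracy alone can mislead on imbalanced data: a model that labels every post as credible still scores 95% accuracy when only 5% of posts are misinformation.

```python
def classification_metrics(tp, tn, fp, fn):
    """Return accuracy, precision, recall and F1 from confusion-matrix counts.

    Ratios with a zero denominator are reported as 0.0 by convention.
    """
    def safe_div(num, den):
        return num / den if den else 0.0

    accuracy = safe_div(tp + tn, tp + tn + fp + fn)
    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    f1 = safe_div(2 * precision * recall, precision + recall)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical counts: 1000 posts, 50 of which are misinformation (positive class).
reasonable = classification_metrics(tp=40, tn=940, fp=10, fn=10)

# Degenerate model that predicts "credible" for every post.
always_negative = classification_metrics(tp=0, tn=950, fp=0, fn=50)

print(reasonable)
print(always_negative)
```

In the degenerate case, precision, recall, and F1 are all zero even though accuracy is 0.95, which is the situation the F1 score is meant to expose.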

User Studies: Conduct surveys or interviews with members of Pidgin-speaking communities to evaluate the system’s impact on trust and information consumption behaviours.

Summary

In conclusion, this research presents a comprehensive hybrid AI framework designed to address the multifaceted challenge of misinformation in Pidgin-speaking West African communities, particularly within the domains of health, politics, and religion. By integrating Transformer-based models for nuanced textual analysis, convolutional neural networks (CNNs) for multimedia content verification, and graph neural networks (GNNs) for modelling the propagation of misinformation across social networks, the proposed system offers a multimodal approach to misinformation detection.

References

Adiba, F. I., Islam, T., Kaiser, M. S., Mahmud, M., & Rahman, M. A. (2020). Effect of Corpora on Classification of Fake News using Naive Bayes Classifier. International Journal of Automation, Artificial Intelligence and Machine Learning. https://doi.org/10.61797/ijaaiml.v1i1.45

Chaka, C. (2022). Digital marginalization, data marginalization, and algorithmic exclusions: a critical southern decolonial approach to datafication, algorithms, and digital citizenship from the Souths. Journal of e-Learning and Knowledge Society, 18(3), 83–95. https://doi.org/10.20368/1971-8829/1135678

Gerber, D., Esteves, D., Lehmann, J., Bühmann, L., Usbeck, R., Ngonga Ngomo, A.-C., & Speck, R. (2015). DeFacto – Temporal and Multilingual Deep Fact Validation. SSRN Electronic Journal. https://doi.org/10.2139/ssrn.3198925

Goyal, P., Taterh, S., & Saxena, A. (2021). Fake News Detection using Machine Learning: A Review. International Journal of Advanced Engineering, Management and Science, 7(3), 33–38. https://doi.org/10.22161/ijaems.73.6

Grönvall, J. (2023). Fact-checkers and the news media: A Nordic perspective on propaganda. Nordic Journal of Media Studies, 5(1), 134–153. https://doi.org/10.2478/njms-2023-0008

Gururaj, H. L., Lakshmi, H., Soundarya, B. C., Flammini, F., & Janhavi, V. (2022). Machine Learning-Based Approach for Fake News Detection. Journal of ICT Standardization. https://doi.org/10.13052/jicts2245-800x.1042

Lin, P.-J., Saeed, M., Chang, E., & Scholman, M. (2023). Low-Resource Cross-Lingual Adaptive Training for Nigerian Pidgin. arXiv. https://doi.org/10.48550/arxiv.2307.00382

Mahl, D., Zeng, J., Schäfer, M. S., Egert, F. A., & Oliveira, T. (2024). “We Follow the Disinformation”: Conceptualizing and Analyzing Fact-Checking Cultures Across Countries. The International Journal of Press/Politics, 27(1). https://doi.org/10.1177/19401612241270004

Pikuliak, M., Srba, I., Moro, R., Hromadka, T., Smoleň, T., Melišek, M., … Bielikova, M. (2023). Multilingual Previously Fact-Checked Claim Retrieval. Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing. https://doi.org/10.18653/v1/2023.emnlp-main.1027

Monti, F., Frasca, F., Eynard, D., Mannion, D., & Bronstein, M. (2019). Fake News Detection on Social Media using Geometric Deep Learning.

Mridha, M. F., Keya, A. J., Hamid, Md. A., Monowar, M. M., & Rahman, Md. S. (2021). A Comprehensive Review on Fake News Detection With Deep Learning. IEEE Access, 9, 156151–156170. https://doi.org/10.1109/access.2021.3129329

Peng, X., Xu, Q., Feng, Z., Zhao, H., Tan, L., Zhou, Y., … (n.d.). Automatic News Generation and Fact-Checking System Based on Language Processing.

Školkay, A., & Filin, J. (2019). A Comparison of Fake News Detecting and Fact-Checking AI Based Solutions. Studia Medioznawcze, 20(4), 365–383. https://doi.org/10.33077/uw.24511617.ms.2019.4.187

Wang, X., Zhang, W., & Rajtmajer, S. (2023). Monolingual and Multilingual Misinformation Detection for Low-Resource Languages: A Comprehensive Survey.

Zhou, J., Hu, H., Li, Z., Yu, K., & Chen, F. (2019). Physiological Indicators for User Trust in Machine Learning with Influence Enhanced Fact-Checking. Lecture Notes in Computer Science, 94–113. https://doi.org/10.1007/978-3-030-29726-8_7