Abstract

This research investigates bias and fairness in large language models (LLMs) across hiring, political decision-making, and information retrieval contexts. The analysis reveals consistent patterns of demographic, dialectal, and political bias in LLM outputs and evaluates the strengths and limitations of current auditing methodologies. The review highlights critical ethical dilemmas in deploying biased AI systems and proposes an interdisciplinary framework for developing more equitable LLM applications. Key challenges include detecting covert biases and implementing mitigation strategies that reduce some biases without introducing others. At the same time, reported successes demonstrate the potential of hybrid human-AI systems and innovative auditing techniques. The review concludes with recommendations for researchers and practitioners working at the intersection of AI ethics and applied linguistics.

Keywords: Artificial intelligence, bias, fairness, AI ethics, AI decision-making, large language models

Introduction

A growing body of research provides compelling evidence that large language models (LLMs) consistently exhibit various forms of bias that mirror and often amplify existing societal prejudices. Multiple studies confirm the presence of troubling demographic biases in LLMs, particularly in high-stakes contexts like hiring decisions. An et al. (2024), Gaebler et al. (2024), and Armstrong et al. (2024) all demonstrate how these models discriminate based on race, ethnicity, and gender. For instance, An et al. found that in simulated hiring scenarios, resumes with Hispanic male names received systematically lower acceptance rates compared to other demographic groups. Similarly, Armstrong et al. revealed that women and minority candidates face significant penalties when applying for positions in male-dominated or White-dominated professional fields.

Beyond these overt demographic biases, researchers have uncovered subtler but equally concerning forms of discrimination. Hofmann et al. (2024) demonstrated how LLMs covertly associate African American English (AAE) with negative stereotypes, even when explicitly trained to avoid overt racism. This dialect discrimination operates beneath the surface of model outputs, making it particularly challenging to detect and address. The political realm is not immune either, as Fisher et al. (2024) showed that LLMs can significantly influence human political decision-making through the subtle shaping of partisan biases in their outputs.

Sources of Bias

These biases appear to stem from multiple interconnected sources. Most fundamentally, the training data reflects and reproduces societal stereotypes, as the findings of Armstrong et al. and Hofmann et al. indicate: the models learn and amplify the prejudices present in their training corpora. Model architecture and size also play a role. Moore et al. (2024) found that while larger models like Llama2-70b generally show reduced bias, the fine-tuning process can introduce new inconsistencies, particularly on controversial topics. The research also highlights how sensitive LLMs are to prompt phrasing. An et al. and Gaebler et al. both demonstrated that subtle changes in prompt wording or framing can significantly alter how biases manifest in model outputs.

Methodological Insights

Researchers have developed innovative approaches to detect and measure these biases. Gaebler et al.’s (2024) correspondence experiments and Hofmann et al.’s (2024) matched guise probing are particularly effective methodologies for uncovering both overt (obvious) and covert (hidden) discrimination in LLMs. These techniques allow researchers to isolate a specific variable (such as a name or a dialect) while holding all other factors constant. Meanwhile, comprehensive surveys like Gallegos et al. (2024) have made significant contributions by systematising the field’s understanding of bias metrics, distinguishing between embedding-based and generation-based approaches, and categorising mitigation strategies across different stages of model development. However, Moore et al.’s work raises important questions about model consistency, showing that LLMs often respond unreliably to paraphrased prompts or multilingual inputs, which complicates efforts to evaluate and mitigate their biases.
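The logic of a correspondence-style audit can be illustrated with a minimal Python sketch. It is not the procedure used by any of the cited studies. The name lists, the resume template, and the `toy_decider` function are hypothetical placeholders. In particular, `toy_decider` stands in for a call to an LLM and is deliberately biased so that the audit has a disparity to detect.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

# Hypothetical placeholder names; a real audit would use validated name sets.
NAMES_BY_GROUP: Dict[str, List[str]] = {
    "group_a": ["Name A1", "Name A2"],
    "group_b": ["Name B1", "Name B2"],
}

# Every resume shares this text, so the name is the only thing that varies.
RESUME_TEMPLATE = (
    "Candidate: {name}\n"
    "Experience: 5 years as a data analyst\n"
    "Education: BSc Statistics"
)


def build_matched_resumes(
    names_by_group: Dict[str, List[str]],
) -> List[Tuple[str, str]]:
    """Return (group, resume) pairs that differ only in the candidate name."""
    return [
        (group, RESUME_TEMPLATE.format(name=name))
        for group, names in names_by_group.items()
        for name in names
    ]


def acceptance_rates(
    decide: Callable[[str], bool],
    names_by_group: Dict[str, List[str]],
) -> Dict[str, float]:
    """Compute the share of accepted resumes for each group."""
    accepted: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for group, resume in build_matched_resumes(names_by_group):
        total[group] += 1
        accepted[group] += int(decide(resume))
    return {group: accepted[group] / total[group] for group in total}


def toy_decider(resume: str) -> bool:
    """Stand-in for an LLM decision (assumption); rejects every 'Name B' resume."""
    return "Name B" not in resume


rates = acceptance_rates(toy_decider, NAMES_BY_GROUP)
print(rates)
```

Because the resumes are identical apart from the name, any gap between the per-group acceptance rates can be attributed to the name signal rather than to qualifications. In practice, `toy_decider` would be replaced by the model under audit, and each resume would be scored many times to account for sampling variation.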

Challenges and Real-World Implications

The practical consequences of these findings are profound and concerning. In hiring contexts, Armstrong et al. warn that unchecked LLM deployment could create a “silicon ceiling” that systematically disadvantages marginalised groups, potentially violating anti-discrimination laws and perpetuating workplace inequalities. Beyond hiring, Dai et al. (2024) demonstrate how LLM-enhanced information retrieval systems may inadvertently propagate misinformation or create dangerous echo chambers through subtle ranking biases. These findings collectively suggest that, without significant intervention, the widespread adoption of LLMs risks hardwiring existing societal inequities into automated decision-making systems at scale. The challenge moving forward will be to develop technical and policy solutions that address these issues without sacrificing the utility of these powerful tools.

Proposed Research Direction

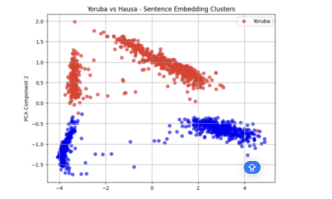

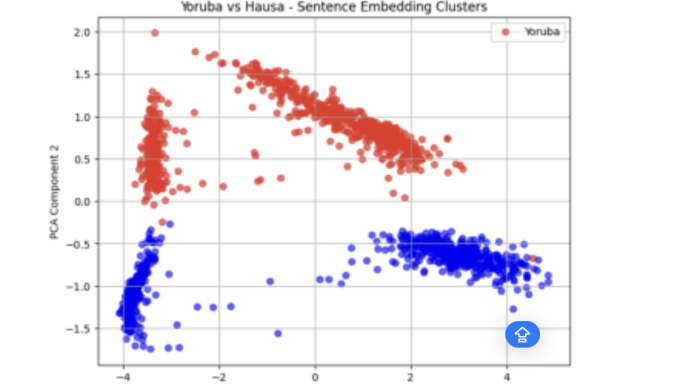

- Expand intersectional and global audits, particularly for non-Western languages and dialects (for example, African languages) and for multicultural value systems (such as those of African communities) that mainstream models do not usually cover.

- Develop auditing frameworks for covert biases in hiring and other domains, combining sociolinguistic methods with algorithmic fairness metrics.

- Evaluate hybrid human-AI workflows to mitigate biases, such as human review of LLM-generated outputs.
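As one possible illustration of how a fairness metric could feed a hybrid human-AI workflow, the Python sketch below computes a selection-rate ratio across groups and flags a batch of LLM decisions for human review when the ratio falls below a threshold. The function names, the example rates, and the 0.8 threshold are all assumptions made for illustration. The threshold echoes the "four-fifths" rule of thumb used in some employment-selection guidance. None of the numbers come from the cited studies.

```python
from typing import Dict


def selection_rate_ratio(rates: Dict[str, float]) -> float:
    """Ratio of the lowest to the highest group selection rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        # No group was selected at all, so there is no disparity to report.
        return 1.0
    return min(rates.values()) / highest


def needs_human_review(rates: Dict[str, float], threshold: float = 0.8) -> bool:
    """Flag a batch for human review when the ratio drops below the threshold.

    The 0.8 default is an illustrative assumption, not a legal standard
    for any particular jurisdiction.
    """
    return selection_rate_ratio(rates) < threshold


# Illustrative per-group acceptance rates (made up for this example).
example_rates = {"group_a": 0.50, "group_b": 0.35}

ratio = selection_rate_ratio(example_rates)
flag = needs_human_review(example_rates)
print(f"ratio={ratio:.2f}, needs_human_review={flag}")
```

A single ratio of this kind captures only overt disparities in outcomes. Covert biases of the kind surfaced by dialect probing would need additional sociolinguistic tests alongside it.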

Interdisciplinary Implications

This synthesis reveals crucial intersections between computer science (algorithm design and artificial intelligence), linguistics (dialect analysis, where bias depends on how a person speaks or on their accent), sociology (bias definition and measurement), law (compliance with anti-discrimination policies), and psychology (human-AI interaction). The most promising solutions emerge from integrating sociolinguistic insights with algorithmic fairness approaches, particularly in developing culturally aware bias detection systems. Future research should foster deeper collaboration between these disciplines to address complex, embedded biases.

Conclusion

These papers collectively underscore that while LLMs offer efficiency gains, their biases risk reinforcing societal inequities. Proactive mitigation through auditing, technical improvements, and policy is critical to ensure equitable deployment. Future work should prioritise real-world validation of debiasing methods and interdisciplinary collaboration (e.g., sociolinguistics, ethics) to address bias holistically. Training data must also be inclusive to prevent the marginalisation of minority groups.

Acknowledgements

Thank you to the Research Round team for this amazing fellowship. I’m grateful to Dr Samuel Segun and Dr Ridwan Oloyede for their time and invaluable mentorship. I also appreciate Habeeb Adewale Tajudeen and Adewumi Adediran of the Social Welfare and Policy sub-group for their help, as well as the entire cohort of fellows and navigators of the fellowship for the insightful discussions.

References

An, Haozhe, Christabel Acquaye, Colin K. Wang, Zongxia Li, and Rachel Rudinger. 2024. “Do Large Language Models Discriminate in Hiring Decisions on the Basis of Race, Ethnicity, and Gender?” Preprint, arXiv, July 18. https://arxiv.org/abs/2406.10486.

Armstrong, Lena, Abbey Liu, Stephen MacNeil, and Danaë Metaxa. 2024. “The Silicon Ceiling: Auditing GPT’s Race and Gender Biases in Hiring.” Preprint, arXiv, July 18. https://arxiv.org/abs/2405.04412.

Dai, Sunhao, Chen Xu, Shicheng Xu, Liang Pang, Zhenhua Dong, and Jun Xu. 2024. “Bias and Unfairness in Information Retrieval Systems: New Challenges in the LLM Era.” Preprint, arXiv, July 18. https://arxiv.org/abs/2404.11457.

Fisher, Jillian, Shangbin Feng, Robert Aron, et al. 2024. “Biased AI Can Influence Political Decision-Making.” Preprint, arXiv, July 18. https://arxiv.org/abs/2410.06415.

Gaebler, Johann D., Sharad Goel, Aziz Huq, and Prasanna Tambe. 2024. “Auditing the Use of Language Models to Guide Hiring Decisions.” Preprint, arXiv, July 18. https://arxiv.org/abs/2404.03086.

Gallegos, Isabel O., Ryan A. Rossi, Joe Barrow, et al. 2024. “Bias and Fairness in Large Language Models: A Survey.” Computational Linguistics 50 (3): 1097–1179. https://doi.org/10.1162/coli_a_00524.

Hofmann, Valentin, Pratyusha R. Kalluri, Dan Jurafsky, and Sharese King. 2024. “AI Generates Covertly Racist Decisions about People Based on Their Dialect.” Nature 633: 147–154. https://doi.org/10.1038/s41586-024-07856-5.

Moore, Jared, Tanvi Deshpande, and Diyi Yang. 2024. “Are Large Language Models Consistent over Value-laden Questions?” Preprint, arXiv, July 18. https://arxiv.org/abs/2407.02996.